My thesis has officially passed committee and, after a final check-the-commas read-through, will be on its way to the external examiners.

December 09, 10:00, Democritus University of Thrace

If you are, or will be, in Xanthi, it would be great if you could join in person. If you are not, there will be a Skype conference call, with preference given to research participants who would like to listen in. Please email me to reserve a slot.

Abstract – Introduction

This chapter lists the goals and the contributions of the current thesis. The goals, which were set at the beginning of this work and adjusted along the way, are:

- The creation of a new family of descriptors that combine more than one low-level feature in a compact vector and that can be incorporated into the existing MPEG-7 standard. The descriptors will be constructed via intelligent techniques.
- The creation of a method for accelerating the search procedure.
- The investigation of several late fusion methods for image retrieval.
- The creation of methods that allow the proposed descriptors to be used in distributed image databases.
- The development of software that implements a large number of the descriptors proposed in the literature.
- The development of open source libraries that implement the proposed descriptors as well as the MPEG-7 descriptors.
- The creation of a new method for encrypting images that uses features and parameters from the image retrieval field.
- The creation of a new method and system implementation that employs the proposed descriptors to achieve video summarization.
- The creation of a new method and system implementation for image retrieval based on "keywords" that are automatically generated using the proposed descriptors.
- Finally, the creation of a new method and system implementation for multi-modal search. The system will use both low-level elements (originating from the proposed descriptors) and high-level elements (originating from the keywords that accompany the images).

Thesis Details

In the past few years there has been a rapid increase in the volume of multi-media data, mostly due to the evolution of information technology. One of the main components of multi-media data is visual data, which includes digital images and video. While producing, compressing and propagating such media has been a subject of scientific interest for a long time, in the past few years, precisely because of the growth in the volume of data, a large part of the research has turned towards the management and retrieval of such material.

The first steps in the automated management and retrieval of visual multi-media can be traced back to 1992, when the term Content Based Retrieval was first used. Since then, a new research field has emerged which, approximately 20 years later, remains active. And while this field initially seemed to be a topic within the general spectrum of information retrieval, over the years it has attracted scientists from various disciplines.

Even though a large number of scientists work in this field, no satisfactory and widely accepted solution to the problem has been proposed. The second chapter of this thesis gives a brief overview of the fundamentals of Content-Based Image Retrieval.

During the course of this thesis, a study was carried out that describes the most commonly used methods for retrieval evaluation and notes their weaknesses. It also proposes a new method of measuring the performance of retrieval systems, together with an extension of this method so that the evaluation of retrieval results takes into account both the size of the database in which the search is executed and the size of the ground truth of each query. The proposed method is generic and can be used to evaluate retrieval performance for any type of information. This work is described in detail in Chapter 3.

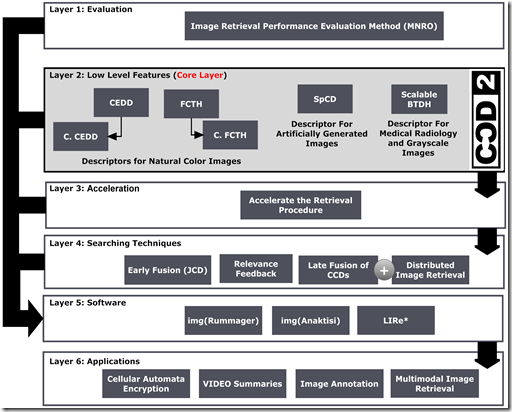

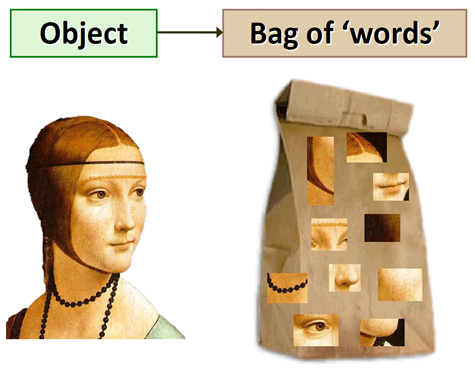

The core of the method proposed in this thesis is contained in the second thematic unit. This unit includes a number of low-level descriptors whose features originate from the content of the multi-media data they describe. In contrast to MPEG-7, each type of multi-media data is described by a specific group of descriptors. The type of material is determined by the content it depicts. The descriptors are built using fuzzy methods and are characterized by their low storage requirements (23-72 bytes per image). Moreover, each descriptor combines more than one feature (e.g., color and texture) in its structure. This attribute classifies them as composite descriptors. The set of descriptors in the second thematic unit of the thesis can be described by the general term Compact Composite Descriptors.

In its entirety, the second thematic unit of the thesis contains descriptors for the following types of multi-media material:

- Category 1: Images/Video with natural content
- Category 2: Images/Video with artificially generated content
- Category 3: Images with medical content

For the description and retrieval of multi-media material with natural content, 4 descriptors were developed:

- CEDD - Color and Edge Directivity Descriptor
- C.CEDD - Compact Color and Edge Directivity Descriptor
- FCTH - Fuzzy Color and Texture Histogram
- C.FCTH - Compact Fuzzy Color and Texture Histogram

The CEDD includes texture information produced by the six-bin histogram of a fuzzy system that uses the five digital filters proposed by the MPEG-7 EHD. Additionally, for color information the CEDD uses a 24-bin color histogram produced by the 24-bin fuzzy-linking system. Overall, the final histogram has 6 × 24 = 144 regions.

The FCTH descriptor includes the texture information produced in the eight-bin histogram of a fuzzy system that uses the high frequency bands of the Haar wavelet transform. For color information, the descriptor uses a 24-bin color histogram produced by the 24-bin fuzzy-linking system. Overall, the final histogram includes 8 × 24 = 192 regions.

The method for producing the C.CEDD differs from the CEDD method only in the color unit. The C.CEDD uses a fuzzy ten-bin linking system instead of the fuzzy 24-bin linking system. Overall, the final histogram has only 6 × 10 = 60 regions. Compact CEDD is the smallest descriptor of the proposed set, requiring less than 23 bytes per image.

The method for producing C.FCTH differs from the FCTH method only in the color unit. Like its C.CEDD counterpart, this descriptor uses only a fuzzy ten-bin linking system instead of the fuzzy 24-bin linking system. Overall, the final histogram includes only 8 × 10 = 80 regions.
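The region counts quoted for the four descriptors follow from pairing every texture bin with every color bin. The sketch below shows one way such a joint histogram could be addressed. The texture-major ordering and the names `DESCRIPTOR_SHAPES`, `histogram_length` and `flat_index` are assumptions made for illustration; the text does not specify how the bins are laid out.

```python
# Illustrative sketch only: the bin ordering (texture-major) is an assumption.
# The (texture_bins, color_bins) pairs are the ones quoted in the text.
DESCRIPTOR_SHAPES = {
    "CEDD": (6, 24),
    "FCTH": (8, 24),
    "C.CEDD": (6, 10),
    "C.FCTH": (8, 10),
}


def histogram_length(name):
    """Total number of regions: texture bins times color bins."""
    texture_bins, color_bins = DESCRIPTOR_SHAPES[name]
    return texture_bins * color_bins


def flat_index(name, texture_bin, color_bin):
    """Map a (texture_bin, color_bin) pair to a position in the flat histogram."""
    texture_bins, color_bins = DESCRIPTOR_SHAPES[name]
    if not 0 <= texture_bin < texture_bins:
        raise ValueError("texture_bin out of range")
    if not 0 <= color_bin < color_bins:
        raise ValueError("color_bin out of range")
    # Each texture bin owns a contiguous run of color_bins entries.
    return texture_bin * color_bins + color_bin


if __name__ == "__main__":
    for name in DESCRIPTOR_SHAPES:
        print(name, histogram_length(name))
```

Under this layout, swapping the 24-bin color unit for the ten-bin one changes only the run length per texture bin, which is consistent with the compact variants differing "only in the color unit".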

To limit the length of the proposed descriptors, the normalized bin values are quantized for binary representation at three bits per bin.

Experiments conducted on several benchmarking image databases show that the proposed descriptors outperform the MPEG-7 descriptors as well as other state-of-the-art descriptors from the literature. These descriptors are described in detail in Chapter 5.
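The storage figures quoted earlier (23-72 bytes per image, and less than 23 bytes for C.CEDD) can be checked by multiplying each region count by three bits. The sketch below does that arithmetic and adds a simple quantizer. The uniform quantizer is an assumption used only to make the idea concrete; the thesis may use a different quantization table, and the function names are hypothetical.

```python
BITS_PER_BIN = 3  # from the text: three bits per bin


def quantize(normalized_bins, bits=BITS_PER_BIN):
    """Uniformly map values in [0, 1] to integer levels 0 .. 2**bits - 1.

    Assumption: uniform steps. Values outside [0, 1] are clamped.
    """
    levels = (1 << bits) - 1  # 7 for three bits
    return [min(levels, max(0, round(v * levels))) for v in normalized_bins]


def storage_bytes(n_bins, bits=BITS_PER_BIN):
    """Storage needed for n_bins quantized values, in bytes."""
    return n_bins * bits / 8


# Region counts as stated in the text.
REGIONS = {"CEDD": 144, "FCTH": 192, "C.CEDD": 60, "C.FCTH": 80}
SIZES = {name: storage_bytes(n) for name, n in REGIONS.items()}

if __name__ == "__main__":
    for name, size in SIZES.items():
        print(f"{name}: {size} bytes")
```

The results are 54 bytes for CEDD, 72 for FCTH, 22.5 for C.CEDD and 30 for C.FCTH, which agrees with the stated range and with C.CEDD needing less than 23 bytes.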

Chapter 6 describes the Spatial Color Distribution Descriptor (SpCD). This descriptor combines color and spatial color distribution information. Since descriptors of this type capture the layout of color features, they can be used for image retrieval with hand-drawn sketch queries. In addition, they are considered suitable for colored graphics, since such images contain a relatively small number of colors and fewer texture regions than natural color images. The descriptor uses a new fuzzy-linking system that maps the colors of the image to a custom 8-color palette.

The rapid advances made in the field of radiology, the increased frequency with which oncological diseases appear, and the demand for regular medical checks have led to the creation of a large database of radiology images in every hospital or medical center. There is now an imperative need for an effective method for indexing and retrieving these images. Chapter 7 describes a new method for content-based retrieval of radiology images using the Brightness and Texture Directionality Histogram (BTDH). This descriptor combines brightness and texture characteristics, as well as the spatial distribution of these characteristics, in one compact 1D vector. Its most important characteristic is that its size adapts to the storage capabilities of the application that uses it.

The requirements of modern retrieval systems are not limited to good retrieval results, but extend to delivering those results quickly. The majority of Internet users would accept a reduction in the accuracy of the results in order to save search time. The third thematic unit describes how the proposed descriptors may be modified to achieve faster retrieval from databases. Test results indicate that the developed descriptors can search approximately 100,000 images per second, regardless of dimensions. Details on the method developed are given in Chapter 8.

Chapter 9 describes the early fusion of the two descriptors that describe visual multi-media material with natural content. Since this category includes more than one descriptor, the procedure for combining these descriptors to further improve the retrieval results is analyzed.

The proposed descriptors are capable of describing images with a specific content. The descriptors developed for images with natural content cannot be used to retrieve grayscale medical images, and vice versa. For this reason, the effectiveness of each descriptor was measured using image databases with homogeneous content suitable for that descriptor. However, most databases found on the Internet are heterogeneous and include images from every category. The fourth thematic unit of this thesis describes how late fusion techniques can be used to combine all the proposed descriptors in order to achieve high retrieval scores in databases of this kind. Linear and non-linear methods adopted from the information retrieval field show that the combination of descriptors yields very satisfactory results when used in heterogeneous databases.

In the same fourth thematic unit, a scenario of retrieval from distributed image databases is considered. In this scenario, the user executes a search across multiple databases. However, each database may use its own descriptor(s) for the images it contains. By once more adopting methods from the information retrieval field and combining them with a method developed in this thesis, it is possible to achieve high retrieval scores. Details on the fusion methods, as well as the retrieval methods from distributed image databases, are given in Chapter 10.

Finally, the fourth thematic unit is completed by a relevance feedback algorithm. The aim of the proposed algorithm is to readjust, or even alter, the initial retrieval results based on user preferences. During this process, the user selects from the retrieved results those images that are similar to his/her expected results. Information extracted from these images is then used to alter the descriptor of the query image. The method is described in Chapter 11.
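To make the idea of linear late fusion concrete, here is a minimal sketch of CombSUM, a well-known score-combination rule from the information retrieval literature. It is offered as a representative example, not as the specific method evaluated in the thesis. It assumes each descriptor returns a mapping from image id to a similarity score where higher is better; the function names and the min-max normalization step are illustrative choices.

```python
def min_max_normalize(scores):
    """Rescale a {image_id: score} mapping to the range [0, 1]."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores equal: treat every result as equally good.
        return {image_id: 1.0 for image_id in scores}
    return {image_id: (s - lo) / (hi - lo) for image_id, s in scores.items()}


def comb_sum(result_lists, weights=None):
    """Fuse several result lists by a weighted sum of normalized scores.

    result_lists: list of {image_id: similarity} dicts, one per descriptor.
    Returns (image_id, fused_score) pairs, best first.
    """
    if weights is None:
        weights = [1.0] * len(result_lists)
    if len(weights) != len(result_lists):
        raise ValueError("one weight per result list is required")
    fused = {}
    for scores, weight in zip(result_lists, weights):
        for image_id, s in min_max_normalize(scores).items():
            fused[image_id] = fused.get(image_id, 0.0) + weight * s
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    natural = {"a": 9, "b": 5, "c": 1}   # hypothetical scores, descriptor 1
    medical = {"b": 10, "c": 8, "d": 2}  # hypothetical scores, descriptor 2
    print(comb_sum([natural, medical]))
```

Normalizing before summing matters here because descriptors designed for different content types produce scores on different scales. If a descriptor reports distances instead of similarities, they would need to be inverted first.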
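The general idea of altering the query descriptor from user-selected images can be sketched as follows. This is a simplified Rocchio-style update that moves the query towards the mean of the selected descriptors. It is an assumption chosen for illustration, not the algorithm of Chapter 11, and the `alpha` parameter and function name are hypothetical.

```python
def update_query(query, selected, alpha=0.5):
    """Move the query descriptor towards the mean of the selected descriptors.

    query: list of bin values for the query image.
    selected: list of descriptors (same length as query) chosen by the user.
    alpha: how strongly the feedback pulls the query (0 = ignore, 1 = replace).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if not selected:
        return list(query)  # no feedback: the query is unchanged
    if any(len(d) != len(query) for d in selected):
        raise ValueError("all descriptors must have the same length")
    n = len(selected)
    mean = [sum(d[i] for d in selected) / n for i in range(len(query))]
    return [(1 - alpha) * q + alpha * m for q, m in zip(query, mean)]


if __name__ == "__main__":
    query = [0.0, 1.0]
    picked = [[1.0, 1.0], [1.0, 0.0]]
    print(update_query(query, picked))
```

The updated descriptor is then used to rerun the search, so images resembling the user's selections rise in the ranking.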

The fifth part of the thesis describes the implementation of the four prior parts in free and open source software packages. During the course of the thesis, 3 software packages were developed:

Img(Rummager) was used to demonstrate the results of the research carried out in this thesis. In addition to the developed descriptors, the software implements a large number of descriptors from the literature (including the MPEG-7 descriptors), so that the application serves as an image retrieval platform on which the behavior of a number of descriptors can be studied. The application can evaluate the retrieval results using the new image retrieval evaluation method as well as MAP and ANMRR. The application was programmed in C# and is freely available via the webpage of the "Automatic Control, Systems and Robotics Laboratory", Department of Electrical and Computer Engineering, Democritus University of Thrace.

Img(Anaktisi) was developed in collaboration with the "Electrical Circuit Analysis Laboratory", Department of Electrical and Computer Engineering, Democritus University of Thrace, and is an Internet-based application capable of executing image retrieval over a large number of images using the tools proposed in this thesis. The application is programmed in C#.

Moreover, the proposed descriptors were included in the free and open source library LiRE. This library is programmed in Java and includes implementations of the most important descriptors used for image retrieval. The program was developed in collaboration with the ALPEN-ADRIA University of Information Technology in Klagenfurt, Austria, Distributed Multimedia Systems Research Group (Ass. Prof. M. Lux). Details on the developed software are given in Chapter 12.
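Of the evaluation measures that Img(Rummager) reports, MAP (mean average precision) is the simplest to state. The sketch below follows the standard definition and is not taken from any of the packages above; the function names and the sample data are hypothetical.

```python
def average_precision(ranked_ids, relevant_ids):
    """Average precision of one ranked result list.

    ranked_ids: image ids in the order returned by the retrieval system.
    relevant_ids: the ground truth for the query.
    """
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, image_id in enumerate(ranked_ids, start=1):
        if image_id in relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this cut-off
    # Relevant images that were never retrieved contribute zero.
    return precision_sum / len(relevant)


def mean_average_precision(runs):
    """Mean of the average precision over (ranked_ids, relevant_ids) pairs."""
    if not runs:
        return 0.0
    return sum(average_precision(r, g) for r, g in runs) / len(runs)


if __name__ == "__main__":
    runs = [
        (["a", "x", "b", "y"], {"a", "b"}),
        (["x", "a"], {"a"}),
    ]
    print(mean_average_precision(runs))
```

In the first sample query the relevant images appear at ranks 1 and 3, giving an average precision of (1/1 + 2/3) / 2.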

The sixth thematic unit of the thesis presents some of the applications that were developed using the methods of the thesis. First, an image encryption system was developed that adopts methods from the field of image retrieval. The proposed method employs cellular automata and image descriptors to ensure the safe transfer of images and video. Chapter 13 describes the method.

In collaboration with the "Electrical Circuit Analysis Laboratory", Department of Electrical and Computer Engineering, Democritus University of Thrace, an automated image annotation system was developed using support vector machines. The combination of descriptors characterizes the image content with one or more words from a predetermined dictionary. Both the developed system and the details of the method are presented in Chapter 14.

In addition, a system that combines all the descriptors from the second thematic unit with the fusion methods of the fourth thematic unit was developed in order to create automated video summaries. The method uses fuzzy classifiers to create a summary with a predetermined number of classes, with the distinctive property that each frame may belong to more than one class. Details are given in Chapter 15.

For the purposes of this thesis, an application was developed that combines the proposed descriptors with high-level features. Specifically, it combines the visual content of 250,000 images from Wikipedia with the tags that accompany the images, as well as with the content of the articles in which these images appear. In essence, this is a fusion problem with multiple modalities, and it is described in Chapter 16.

The MPEG-7 standard proposes a structure through which audiovisual multi-media databases are described. Each database is described by an XML file that contains the information for each image in a standardized format. This structure allows other applications to extend it by adding new fields. The first appendix of the thesis analyzes how the developed descriptors can be incorporated into the standardized MPEG-7 format.

